Google's Gemini 3 Pro Redefines AI Benchmarks, Surpassing GPT-5.1

Just this evening, Google released Gemini 3, headlined by the Gemini 3 Pro model, pushing the field another significant step toward Artificial General Intelligence (AGI). This major update intensifies the ongoing race among tech giants to achieve true general intelligence.

The announcement prompted a notable public exchange between OpenAI's Sam Altman and Google's Sundar Pichai, underscoring the competitive spirit driving the industry.

So, how does this new model perform? An analysis of key industry leaderboards provides a clear picture.

A New Leader on the Arena

Gemini 3 Pro has claimed the top spot on the highly regarded LMSYS Chatbot Arena, a platform that ranks models by human-preference ratings, with a record-breaking Elo score of 1501.

This is not a marginal improvement. Gemini 3 Pro leads across nearly every sub-category, often by a substantial margin. More importantly, it dominates on the benchmarks that truly matter for AGI progress.
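To give the Elo figure some intuition, here is a minimal sketch of how a rating gap translates into an expected head-to-head preference rate. It assumes the classic Elo expectation formula with a 400-point scale applies to the Arena scores; the leaderboard's actual fitting procedure may differ. The rival rating of 1450 is a made-up value for illustration, not a number from the leaderboard.

```python
def expected_win_rate(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Classic Elo expectation: probability that model A is preferred over model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


GEMINI_3_PRO = 1501        # score quoted in the article
HYPOTHETICAL_RIVAL = 1450  # made-up rating, for illustration only

if __name__ == "__main__":
    p = expected_win_rate(GEMINI_3_PRO, HYPOTHETICAL_RIVAL)
    print(f"Expected preference rate against a 1450-rated model: {p:.1%}")
```

Under this formula, equal ratings give a 50% preference rate, and even a gap of a few dozen points means the higher-rated model is preferred only somewhat more than half the time.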

Beyond Standard Metrics: The AGI-Focused Benchmarks

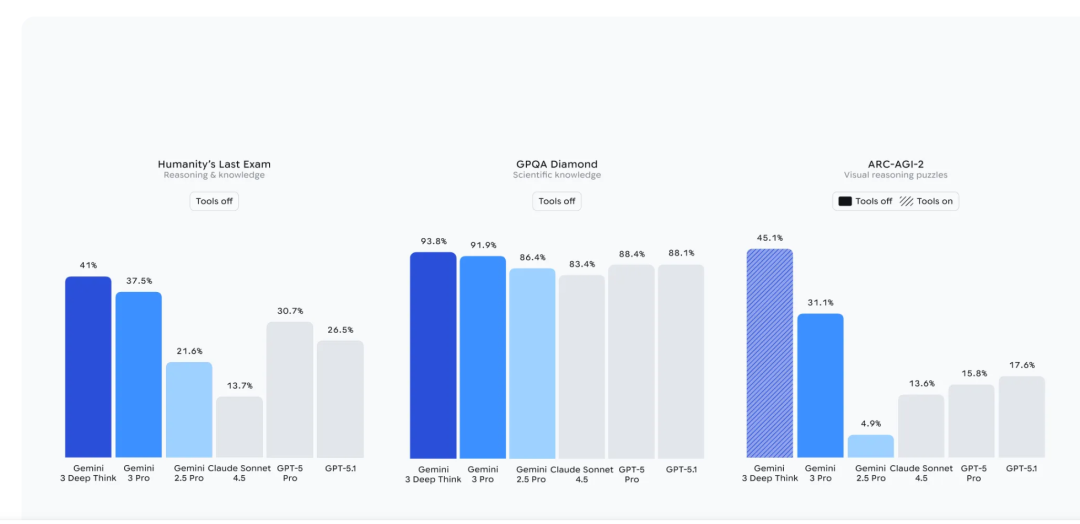

What makes this achievement particularly noteworthy are the specific benchmarks where Gemini 3 Pro excels. These are not standard tests of isolated skills but complex evaluations specifically designed to measure progress toward AGI. Two benchmarks are especially telling: Humanity's Last Exam and ARC-AGI.

- Humanity's Last Exam: This demanding multimodal benchmark consists of 3,000 high-difficulty questions designed to test true general intelligence. If MMLU is the AI equivalent of the SATs and AIME is a math competition, then Humanity's Last Exam is the ultimate test for AGI: a modern successor to the Turing Test for today's large models. Gemini 3 Pro scored 37.5% compared to GPT-5.1's 26.5%, an 11-point lead that demonstrates meaningful progress toward general intelligence.

-

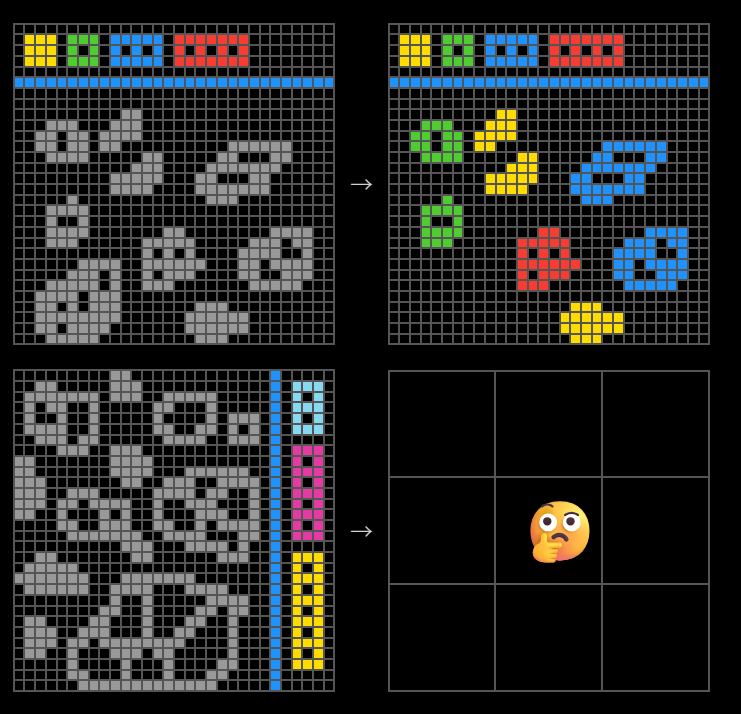

ARC-AGI: This benchmark, originating from a notoriously difficult Kaggle competition by Keras creator François Chollet, tests abstract visual reasoning through pattern recognition puzzles. These aren't tasks that can be solved through memorization—they require true generalization and reasoning. Gemini 3 Pro achieved 45.1% with code execution, while its Deep Think mode performs even better.

These are problems that present a formidable challenge even for advanced AI models.

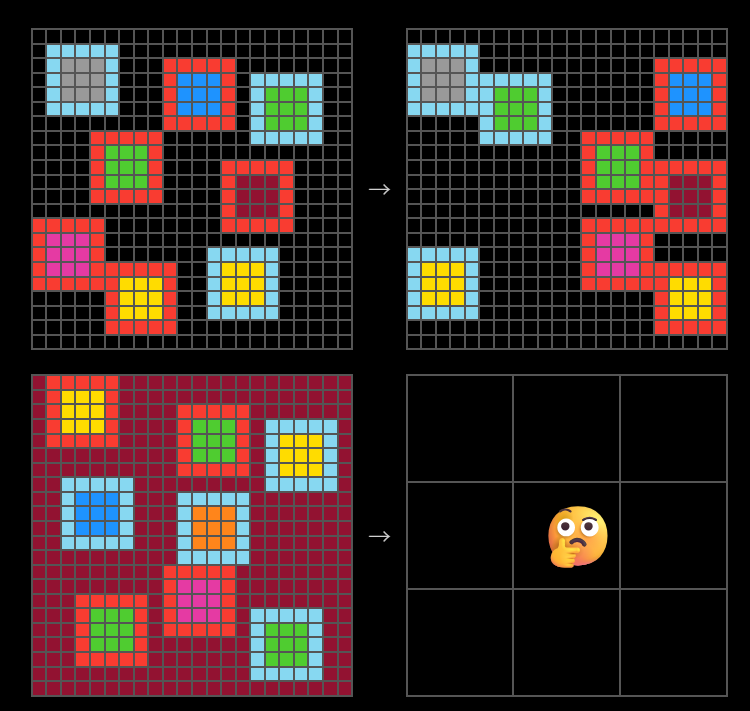

While a human might reason through the logic in the image above, the next example is far more complex.

This type of puzzle often stumps human problem-solvers. The performance gap between models on such tasks remains wide, highlighting the difficulty of true abstract reasoning.

The key takeaway is that Gemini 3 Pro is not merely making incremental progress; it is establishing a new performance ceiling on benchmarks specifically designed to measure generalizable intelligence. In Google's own testing across 20 different benchmarks, Gemini 3 Pro achieved the top score in 19 of them when compared to GPT-5.1.

Google itself is emphasizing these benchmarks as primary indicators of its progress toward AGI, highlighting them as the most meaningful measures of advancement.
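To put the Humanity's Last Exam figures quoted earlier in perspective, the short sketch below separates the absolute lead (percentage points) from the relative lead (percent improvement over the runner-up). The conversion to a question count assumes all 3,000 questions are scored equally, which is a simplification rather than a statement about how the benchmark is actually graded.

```python
def score_gap(leader_pct: float, runner_up_pct: float, total_questions: int):
    """Return (absolute lead in points, relative lead in %, implied extra correct answers)."""
    absolute = leader_pct - runner_up_pct
    relative = absolute / runner_up_pct * 100
    # Assumption: every question carries equal weight in the reported score.
    extra_correct = round(absolute / 100 * total_questions)
    return absolute, relative, extra_correct


absolute, relative, extra = score_gap(37.5, 26.5, 3000)
print(f"Absolute lead: {absolute:.1f} points")
print(f"Relative lead: {relative:.1f}%")
print(f"Implied additional correct answers: {extra}")
```

The distinction matters when reading headlines: an 11-point gap on a benchmark where both models score below 40% is a much larger relative jump than the same gap would be near the top of the scale.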

From Benchmarks to Breakthrough Capabilities

While benchmark scores are indicative, the true measure of a model's power lies in its application to complex, real-world tasks. Let's examine a head-to-head comparison with OpenAI's latest GPT-5.1.

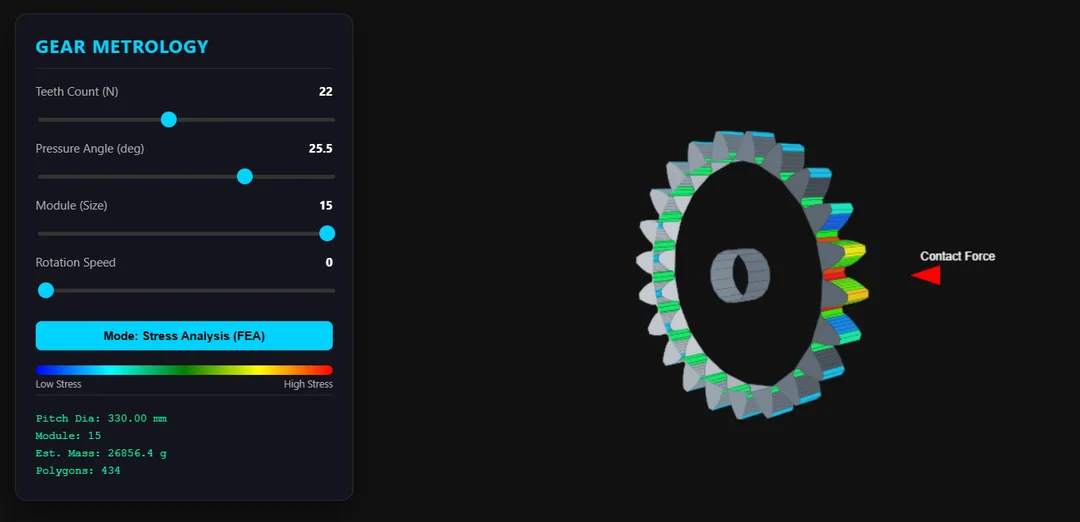

The challenge: generate a complex 3D spur gear visualization using only mathematical formulas, with no external libraries. The model must also perform stress and contact analysis.

Prompt: Create the best visualization of a spur gear in 3D possible, without external libraries. It should be fully math-based and include a stress analysis and contact analysis.

GPT-5.1 took a full 7 minutes to analyze and produce its result.

In stark contrast, Gemini 3 Pro completed the same task in just 30 seconds, roughly 14 times faster. The qualitative difference is immediately apparent: the rendering of the gear tooth profile, the lighting effects, and the perspective all demonstrate a significantly higher level of sophistication. Next to it, GPT-5.1's output looks noticeably crude.
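For readers wondering what a "fully math-based" tooth profile involves, the sketch below samples one flank of a standard involute spur gear tooth using only the Python standard library. It is not the output of either model. The function names, the 20-degree pressure angle, and the one-module addendum are illustrative assumptions based on common gear conventions.

```python
import math


def involute_point(base_radius: float, t: float) -> tuple[float, float]:
    """Point on the involute of a circle at roll angle t (radians)."""
    x = base_radius * (math.cos(t) + t * math.sin(t))
    y = base_radius * (math.sin(t) - t * math.cos(t))
    return x, y


def tooth_flank(module: float, teeth: int, pressure_angle_deg: float = 20.0,
                samples: int = 8) -> list[tuple[float, float]]:
    """Sample one involute flank from the base circle out to the tip circle."""
    pitch_radius = module * teeth / 2
    base_radius = pitch_radius * math.cos(math.radians(pressure_angle_deg))
    tip_radius = pitch_radius + module  # assumed standard addendum of one module
    # The involute's distance from the center at roll angle t is base_radius * sqrt(1 + t^2),
    # so solve for the roll angle at which the curve reaches the tip circle.
    t_max = math.sqrt((tip_radius / base_radius) ** 2 - 1)
    return [involute_point(base_radius, t_max * i / (samples - 1)) for i in range(samples)]


flank = tooth_flank(module=2.0, teeth=20)
for x, y in flank:
    print(f"{x:8.3f} {y:8.3f}")
```

A full 3D visualization would mirror this flank, repeat it around the gear, and extrude it along the axis; stress and contact analysis are separate problems layered on top of this geometry.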

Another impressive demonstration involves the following prompt: create a cloud-based OS with basic functions like an interactive UI, a text editor, and a web browser. The OS must access the live internet, set Wikipedia as its homepage, and feature a user-friendly, aesthetic design.

The result was a functional, self-contained operating system generated from scratch, capable of browsing the live internet. This 'browser in a vat' concept showcases the model's ability to create complex, interactive systems on command.

The 'Model is the Platform' Paradigm

The capabilities demonstrated by Gemini 3 signal a fundamental shift in the AI development landscape. We're entering an era where "the model is the platform"—AGI systems become universal problem-solvers. This paradigm shift allows developers to focus on prompt engineering rather than building specialized software stacks, as illustrated by this classic industry diagram:

This trend has significant implications for specialized applications and the practice of fine-tuning.

As general-purpose models approach AGI, they are likely to absorb capabilities that currently require niche applications. The 'general' aspect of AGI means a single, powerful tool can be prompted to solve a vast array of problems. This shift is already reflected in the current hiring boom: salaries for new graduates in foundational model R&D are soaring, attracting top talent away from business applications and toward core research.

Gemini 3 Pro API Pricing and Availability

Google has released the Gemini 3 Pro API with competitive pricing tiers designed for various use cases. The model is available through both Google AI Studio and Vertex AI.

Standard Tier Pricing (per 1M tokens):

- Input (≤ 200K tokens): $2.00

- Output (≤ 200K tokens): $12.00

- Long context input (> 200K tokens): $4.00

- Long context output (> 200K tokens): $18.00

Context Caching:

- Cache read (≤ 200K tokens): $0.20 per 1M tokens

- Cache read (> 200K tokens): $0.40 per 1M tokens

- Cache storage: $4.50 per 1M tokens per hour

Batch API (50% discount):

- Input (≤ 200K tokens): $1.00 per 1M tokens

- Input (> 200K tokens): $2.00 per 1M tokens

- Output (≤ 200K tokens): $6.00 per 1M tokens

- Output (> 200K tokens): $9.00 per 1M tokens
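As a rough illustration of how these rates combine, the sketch below estimates the cost of a single request on the standard and batch tiers. The prices are copied from the lists above. The function name is hypothetical, and the rule that the prompt length alone selects the long-context rate for both input and output is an assumption; check the official pricing page before relying on it.

```python
# USD per 1M tokens as (<= 200K prompt, > 200K prompt), copied from the lists above.
PRICES = {
    "standard": {"input": (2.00, 4.00), "output": (12.00, 18.00)},
    "batch": {"input": (1.00, 2.00), "output": (6.00, 9.00)},
}
LONG_CONTEXT_THRESHOLD = 200_000


def request_cost(input_tokens: int, output_tokens: int, tier: str = "standard") -> float:
    """Estimated USD cost of one request."""
    # Assumption: the prompt length decides which rate applies to input AND output.
    idx = 1 if input_tokens > LONG_CONTEXT_THRESHOLD else 0
    rates = PRICES[tier]
    total = input_tokens * rates["input"][idx] + output_tokens * rates["output"][idx]
    return total / 1_000_000


if __name__ == "__main__":
    for tier in ("standard", "batch"):
        print(tier, f"${request_cost(100_000, 10_000, tier):.2f}")
```

For a 100K-token prompt with a 10K-token response, this works out to about $0.32 on the standard tier and $0.16 through the Batch API.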

With a 1 million token context window, Gemini 3 Pro can handle extremely long documents and multi-turn conversations. The context caching feature provides significant cost savings for applications that reuse common prompts or knowledge bases.
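Whether caching actually saves money depends on how often the shared prefix is reused before the cache expires. The sketch below compares the two cases for a prefix under 200K tokens, using the rates listed above. It assumes the first request pays the normal input price to populate the cache and that storage is billed for the full duration; neither detail is stated in this article, so treat the result as an approximation.

```python
INPUT_PRICE = 2.00          # USD per 1M input tokens (prompts <= 200K)
CACHE_READ_PRICE = 0.20     # USD per 1M cached tokens read (<= 200K)
CACHE_STORAGE_PRICE = 4.50  # USD per 1M tokens per hour


def uncached_cost(prefix_tokens: int, requests: int) -> float:
    """Cost of resending the same prefix with every request."""
    return requests * prefix_tokens * INPUT_PRICE / 1_000_000


def cached_cost(prefix_tokens: int, requests: int, hours: float) -> float:
    """Cost of caching the prefix once and reading it on later requests."""
    first_request = prefix_tokens * INPUT_PRICE / 1_000_000  # assumed cache-fill cost
    reads = (requests - 1) * prefix_tokens * CACHE_READ_PRICE / 1_000_000
    storage = prefix_tokens * CACHE_STORAGE_PRICE / 1_000_000 * hours
    return first_request + reads + storage


if __name__ == "__main__":
    for n in (2, 4, 10):
        print(n, f"{uncached_cost(100_000, n):.2f}", f"{cached_cost(100_000, n, 1):.2f}")
```

With a 100K-token prefix held for one hour, caching costs more for two requests (the storage fee dominates) but comes out ahead from the fourth request onward under these assumptions.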

During the preview period, Google is offering generous free tier limits to encourage adoption and testing.
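For developers who want to try the model from code, a minimal sketch using the `google-genai` Python SDK might look like the following. The model identifier `gemini-3-pro-preview` and the `GEMINI_API_KEY` environment variable name are assumptions, and `build_request` is a hypothetical helper; check the model list in AI Studio for the exact ID available to your account.

```python
import os

MODEL_ID = "gemini-3-pro-preview"  # assumed identifier, verify in AI Studio


def build_request(prompt: str, model: str = MODEL_ID) -> dict:
    """Hypothetical helper: keyword arguments for a generate_content call."""
    return {"model": model, "contents": prompt}


def generate(prompt: str) -> str:
    """Send one prompt to the Gemini API and return the text of the response."""
    # Imported lazily so this file loads even without the SDK installed
    # (install with: pip install google-genai).
    from google import genai

    client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
    response = client.models.generate_content(**build_request(prompt))
    return response.text


if __name__ == "__main__" and "GEMINI_API_KEY" in os.environ:
    print(generate("Summarize the involute gear tooth profile in two sentences."))
```

Keeping the request construction separate from the network call makes it easy to log or unit-test what is being sent without spending tokens.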

The Road Ahead

The release of Gemini 3 Pro is more than an incremental update; it represents a significant leap in reasoning capabilities and multimodal understanding. By achieving record scores on AGI-focused benchmarks and outperforming GPT-5.1 on 19 out of 20 tests, Google has demonstrated clear leadership in the race toward AGI.

The pressure now mounts on competitors like OpenAI and Anthropic to respond. As these tech giants continue to push boundaries, the pace of innovation toward true general intelligence is accelerating at an unprecedented rate.

Key Takeaways

- Google's Gemini 3 Pro achieves a record 1501 Elo score on LMSYS Arena, surpassing all competitors.

- Gemini 3 outperforms GPT-5.1 on 19 of 20 benchmarks, with major leads on AGI-critical tests like Humanity's Last Exam (37.5% vs 26.5%).

- The model demonstrates practical superiority, generating complex 3D visualizations in 30 seconds vs GPT-5.1's 7 minutes.

- API pricing starts at $2.00 per 1M input tokens and $12.00 per 1M output tokens, with 50% batch discounts and context caching available.

- With a 1 million token context window, Gemini 3 Pro handles extremely long documents and multi-turn conversations.

- This release marks a significant step toward AGI, with Google emphasizing benchmarks that measure true general intelligence.