Ready to build with multi-agent AI but overwhelmed by the sheer number of frameworks? You're not alone. Choosing the right multi-agent AI framework is the critical first step toward success. To cut through the noise, we've reviewed the top multi-agent frameworks and sorted them into three tiers (Learning, Development, and Production) to help you find the right fit. Whether you're a student exploring core concepts, a developer prototyping the next big thing, or an enterprise architect building a production-grade system, this guide will help you select the best AI agent framework for your project.

A quick note on these categories: they're flexible. A powerful production framework can be used for learning, but a simple learning tool won't scale for a high-stakes production environment.

Let's dive into our comparison of the best multi-agent AI frameworks.

Tier 1: Top Multi-Agent Frameworks for Learning

These frameworks are perfect for educational purposes, experimentation, and getting started with multi-agent concepts. They are ideal for anyone new to agentic workflows.

OpenAI Swarm: A Beginner-Friendly Multi-Agent Tool

Labels: "Ideal for Learning", "Beginner-Friendly", "Experimental Framework", "Rapid Prototyping Tool"

Project URL: https://github.com/openai/swarm

Pros:

- Feather-light and Beginner-Friendly: Swarm is built on a minimalist philosophy. With just two core concepts (Agent and Handoff), the learning curve is incredibly gentle. You can implement task handoffs between agents using simple Python scripts.

- Transparent and Controllable: Swarm gives you fine-grained control over context, tool calls, and task flows. Since almost everything is handled client-side with no server state, debugging is a breeze.

- Open-Source and Modular: As an open-source project, you can freely modify the core logic and integrate custom tools through its flexible function-calling mechanism.

- Stateless and Lightweight: Built on the stateless Chat Completions API, Swarm keeps no state between calls, so it adds very little runtime overhead. That makes it ideal for quick prototypes and small-scale experiments.

- Education-Oriented Design: It ships with a set of examples, from a simple triage agent to airline customer service and support bots, that clearly illustrate multi-agent collaboration patterns.

Cons:

- Not Production-Ready: Swarm is still experimental, and OpenAI explicitly advises against using it in production. It also lacks any form of persistent state management.

- Locked into the OpenAI Ecosystem: Swarm is built around the OpenAI Python client and Chat Completions API, so other large language models (LLMs), including locally deployed ones, can only be used if they expose an OpenAI-compatible endpoint.

- Limited Flexibility for Complex Workflows: Compared to more advanced frameworks like LangGraph, implementing complex, multi-step agentic workflows is challenging, and it struggles with tasks requiring long-term memory.
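
To make the Agent/handoff idea concrete: in Swarm, a handoff happens when one of an agent's functions returns another Agent. The stdlib-only sketch below imitates that control flow without calling any model, so the `Agent` dataclass, the `run` loop, and the keyword-based `triage_rule` are hypothetical stand-ins rather than Swarm's actual API. The loop is stateless in the same sense as Swarm: the history lives only inside one call and is handed back to the caller.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union

@dataclass
class Agent:
    """Hypothetical stand-in for a Swarm-style agent: a name plus a reply rule."""
    name: str
    # Given the latest message and the shared history, return a reply string
    # or another Agent to hand the conversation off to.
    respond: Callable[[str, list], Union[str, "Agent"]]

def run(agent: Agent, message: str, max_handoffs: int = 5) -> Tuple[Agent, List[dict]]:
    """Stateless loop: history exists only in this call and is returned to the caller."""
    history: List[dict] = [{"role": "user", "content": message}]
    for _ in range(max_handoffs + 1):
        result = agent.respond(message, history)
        if isinstance(result, Agent):
            # Handoff: the new agent takes over with the same history.
            history.append({"role": "system", "content": f"handoff: {agent.name} -> {result.name}"})
            agent = result
            continue
        history.append({"role": "assistant", "content": result, "agent": agent.name})
        return agent, history
    raise RuntimeError("too many handoffs")

refunds = Agent("Refunds", lambda msg, history: "Refund request logged.")
sales = Agent("Sales", lambda msg, history: "Here is our pricing.")

def triage_rule(msg: str, history: list):
    # Keyword routing stands in for an LLM choosing a handoff function.
    return refunds if "refund" in msg.lower() else sales

triage = Agent("Triage", triage_rule)

final_agent, history = run(triage, "I want a refund")
print(final_agent.name, "->", history[-1]["content"])  # Refunds -> Refund request logged.
```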

Tier 2: AI Agent Frameworks for Development & Prototyping

Ready to build and test real applications? These development-focused AI agent frameworks offer more power and flexibility for robust prototyping.

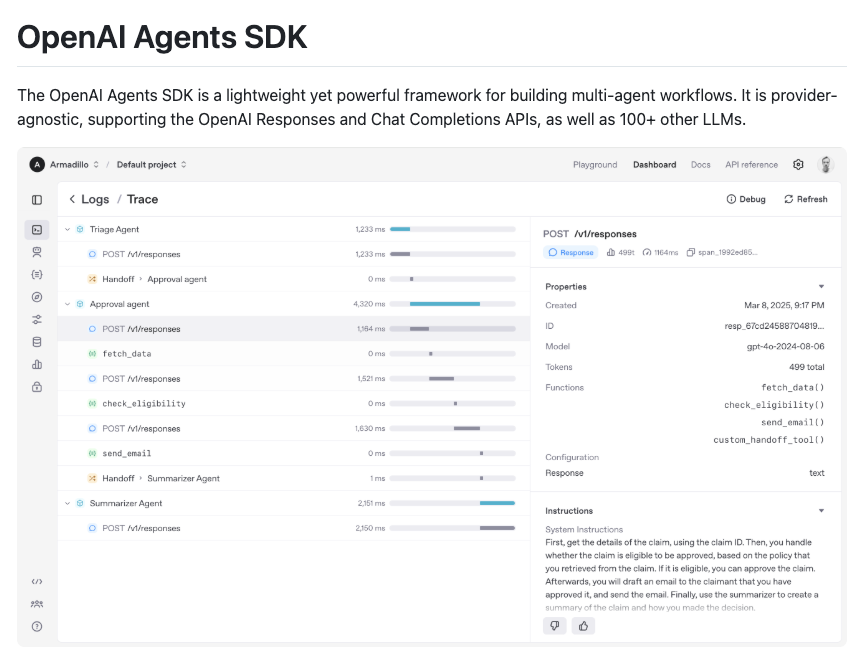

OpenAI Agents SDK: Prototyping Multi-Agent Collaboration

Labels: "Python-First Experimental Framework", "Multi-Agent Collaboration Prototyping", "Intermediate Developer Tool"

Project URL: https://github.com/openai/openai-agents-python

Pros:

- Intuitive Python-First Design: You can orchestrate agents using native Python syntax without learning complex new abstractions. It offers out-of-the-box support for tool calling and multi-agent collaboration, perfect for rapid prototyping.

- Flexible and Extensible: The SDK supports deep customization of agent logic and allows you to integrate any custom Python function as a tool. It's also compatible with third-party models from Anthropic, Llama, and others, helping you avoid vendor lock-in.

- Built-in Multi-Agent Collaboration: The Handoffs mechanism enables dynamic task allocation between agents, supporting complex workflow orchestration. The built-in Agent Loop automates the entire tool-calling and feedback cycle.

- Robust Developer Tooling: It includes a built-in Tracing system to help you visualize and debug workflows, Guardrails for validating inputs and outputs, and Pydantic-based validation for typed, structured outputs.

Cons:

- Lacks Enterprise-Ready Features: It's missing key enterprise features, such as the optimized deployment paths you'd find in frameworks like Google's ADK, and its built-in persistence falls short of full database integration. Native security mechanisms like permission management are basic and require custom extensions.

- Still Maturing: While recent updates have improved documentation, monitoring, and session persistence, its stability in complex, large-scale production scenarios is still unproven.
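
The Agent Loop and Guardrails listed above can be illustrated without any API calls. This is a hypothetical, stdlib-only mimic, not the SDK's classes: `agent_loop`, `fake_model`, and `no_secrets_guardrail` are illustrative names, and a scripted function stands in for the LLM. The loop keeps asking the model for its next step, runs any requested tool, and stops on a final answer, while the input guardrail rejects a request before the model is ever called.

```python
from typing import Callable, Dict, List, Tuple, Union

ToolCall = Tuple[str, dict]        # (tool name, keyword arguments)
ModelStep = Union[ToolCall, str]   # either a tool call or the final answer

class GuardrailTripped(Exception):
    """Raised when input validation rejects a request."""

def no_secrets_guardrail(user_input: str) -> None:
    # Hypothetical input guardrail: block requests that mention passwords.
    if "password" in user_input.lower():
        raise GuardrailTripped("input mentions a password")

def agent_loop(model: Callable[[List[dict]], ModelStep],
               tools: Dict[str, Callable[..., str]],
               user_input: str,
               max_turns: int = 5) -> str:
    no_secrets_guardrail(user_input)            # validate before any model call
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_turns):
        step = model(messages)
        if isinstance(step, str):               # a final answer ends the loop
            return step
        name, args = step
        result = tools[name](**args)            # run the requested tool
        messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("max_turns exceeded")

def fake_model(messages: List[dict]) -> ModelStep:
    # Scripted stand-in for an LLM: call the weather tool once, then answer.
    tool_msgs = [m for m in messages if m["role"] == "tool"]
    if not tool_msgs:
        return ("get_weather", {"city": "Paris"})
    return f"The forecast is: {tool_msgs[-1]['content']}"

tools = {"get_weather": lambda city: f"sunny in {city}"}
print(agent_loop(fake_model, tools, "What's the weather in Paris?"))
```

Capping the loop with `max_turns` is a deliberate choice: a model that keeps requesting tools would otherwise never terminate.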

LangChain-Chatchat: Building RAG for Intelligent Agents

While not a multi-agent framework at its core, LangChain-Chatchat is a powerful, open-source solution for building the RAG-based knowledge systems that intelligent agents rely on. It excels at creating private, data-sensitive knowledge bots.

Labels: "Enterprise-Grade Tool", "Developer-Friendly", "Private Deployment Solution"

Project URL: https://github.com/chatchat-space/Langchain-Chatchat

Pros:

- Modular and Open-Source: Built on the popular LangChain framework, it offers a fully modular pipeline. You can mix and match document loaders, text splitters, vector models, and LLMs to fit your exact needs.

- Built for Private Deployment: It's designed for data-sensitive scenarios, with full support for offline deployment of local LLMs (like ChatGLM and Qwen) and vector databases.

- Handles Diverse Document Formats: It's compatible with .txt, .pdf, .docx, and more, providing a standardized process for building robust enterprise knowledge bases.

- Vibrant Community and Ecosystem: Because it's built on LangChain, it benefits from that ecosystem's active community and can be extended with advanced features like agentic workflows.

Cons:

- Complex to Configure and Tune: Performance is highly sensitive to the combination of embedding models, LLMs, and chunking parameters. Achieving optimal results requires significant tuning and experience.

- Performance Bottlenecks with Large Files: Uploading and vectorizing large files is slow. For example, a 100MB PDF can take several minutes to process, which can limit real-time applications.

- Effectiveness Hinges on Your LLM: The quality of the answers is directly tied to the capability of the underlying LLM. Smaller local models are often prone to hallucination or providing irrelevant responses.

- Potential for Instability: Some versions have known bugs, such as knowledge base matching failures, requiring rigorous testing before any production deployment.
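
The retrieval half of such a pipeline can be sketched in a few lines. This is a hypothetical, stdlib-only illustration, not Chatchat's code: a word-count vector stands in for a real embedding model, and `split_text`, `embed`, and `retrieve` are made-up names.

```python
import math
import re
from collections import Counter
from typing import List

def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Fixed-size character splitter; consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real pipeline calls an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

doc = ("Our VPN requires two-factor authentication. "
       "Expense reports are due on the fifth business day. "
       "Laptops are refreshed every three years.")
chunks = split_text(doc, chunk_size=60, overlap=10)
print(retrieve("when are expense reports due", chunks, k=1))
```

With these settings the best-scoring chunk starts mid-word ("e reports are due on the fifth business day..."), because the sentence straddles a chunk boundary. Changing `chunk_size` or `overlap` changes which chunks exist and which one wins, which is the tuning sensitivity listed in the cons.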

Tier 3: Production-Grade Multi-Agent AI Frameworks

These frameworks are battle-tested and designed for building scalable, reliable, and large-scale AI applications for enterprise use cases.

Qwen-Agent: A Versatile Production Framework

Labels: "Production Framework", "Developer-Friendly", "Enterprise-Grade Tool"

Project URL: https://github.com/QwenLM/Qwen-Agent

Pros:

- Versatile, All-in-One Capabilities: Deeply integrates instruction following, tool use, planning, and memory into a single package. A simple plugin mechanism allows you to rapidly extend its functionality with custom tools for image generation, code execution, and more.

- Exceptional Long-Context Processing: By combining chunked reading with RAG, it extends a model's effective context from 8K to 1 million tokens, letting it work over ultra-long documents while maximizing information retention.

- Production-Ready, Multi-Modal Architecture: Supports mixed text and image interactions and provides both low-level components for rapid prototyping and high-level abstractions for enterprise-grade applications.

- Streamlined Deployment: Offers one-click deployment via Alibaba Cloud's DashScope and supports self-hosting of open-source models. It also includes a Gradio-based GUI for quickly setting up and testing agents and tools.

Cons:

- Default Security Risks: The code interpreter does not have sandboxing enabled by default, which poses a significant security risk in production environments.

- Tightly Coupled with Alibaba Cloud: Core functionalities are deeply tied to Alibaba Cloud services like the DashScope API, with few examples of third-party service integrations.

- Steep Learning Curve for Advanced Features: The documentation and examples for its multi-agent framework and advanced features (like hierarchical RAG) are sparse, making them difficult to master.
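
Qwen-Agent's examples register custom tools with a `register_tool` decorator applied to a tool class. Because the library's exact base-class contract may vary by version, the sketch below reimplements only the general decorator-registry pattern in plain Python; `TOOL_REGISTRY`, `BaseTool`, `WordCount`, and `dispatch` are hypothetical. Tools receive JSON-encoded arguments, as they would from an LLM function call.

```python
import json
from typing import Dict, Type

class BaseTool:
    """Hypothetical base class: subclasses describe themselves and implement call()."""
    name = ""
    description = ""

    def call(self, params: str) -> str:
        raise NotImplementedError

TOOL_REGISTRY: Dict[str, Type[BaseTool]] = {}

def register_tool(name: str):
    """Class decorator that records a tool class under a unique name."""
    def decorator(cls: Type[BaseTool]) -> Type[BaseTool]:
        if name in TOOL_REGISTRY:
            raise ValueError(f"tool {name!r} already registered")
        cls.name = name
        TOOL_REGISTRY[name] = cls
        return cls
    return decorator

@register_tool("word_count")
class WordCount(BaseTool):
    description = "Counts the words in a piece of text."

    def call(self, params: str) -> str:
        args = json.loads(params)               # arguments arrive as a JSON string
        return json.dumps({"words": len(args["text"].split())})

def dispatch(tool_name: str, params: str) -> str:
    """Look up a registered tool by name, instantiate it, and invoke it."""
    return TOOL_REGISTRY[tool_name]().call(params)

print(dispatch("word_count", json.dumps({"text": "agents call tools"})))  # {"words": 3}
```

A registry like this is also where the sandboxing concern above matters: every registered tool, including a code interpreter, runs with the permissions of the host process.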

MetaGPT: SOP-Driven Multi-Agent Collaboration

Labels: "Production Framework", "Complex Task Collaboration", "Enterprise-Grade Development"

How it compares: Unlike conversational frameworks like AutoGen, MetaGPT uses a structured, SOP-driven approach that mimics a software development team. This makes it exceptionally good at generating complex, high-quality artifacts like code, system architecture diagrams, and requirement documents.

Project URL: https://github.com/FoundationAgents/MetaGPT

Pros:

- SOP-Driven Multi-Agent Collaboration: MetaGPT assigns roles (e.g., Product Manager, Architect, Engineer) to agents who follow Standardized Operating Procedures (SOPs). This structured approach breaks down complex tasks and reduces logical errors.

- Generates High-Quality, Structured Outputs: It produces professional-grade artifacts like requirement documents and system design diagrams. A shared message pool, to which agents publish and subscribe, keeps them synchronized and boosts overall efficiency.

- Highly Extensible Architecture: You can easily add new agent roles to handle more complex tasks and integrate custom tools via its ToolServer to adapt to diverse project needs.

- Impressive Performance on Coding Benchmarks: It achieves a pass rate of over 85% on benchmarks like HumanEval and MBPP, significantly outperforming similar frameworks.

- End-to-End Software Development Simulation: MetaGPT can simulate the entire software development lifecycle, from requirements analysis to coding, testing, and deployment, making it a powerful tool for end-to-end project automation.

Cons:

- Rigid Role and Process Structure: Once a team is defined, its roles and SOPs are relatively fixed, making it difficult to add new roles (like a UI Designer) or adjust collaboration patterns on the fly.

- Prone to Resource Hallucinations: Agents occasionally reference non-existent files (like images or audio) or undefined classes, which can cause execution to fail.

- Resource-Intensive and Costly: It relies on high-performance LLMs like GPT-4. Complex tasks can trigger a cascade of API calls, leading to high operational costs.

- Heavy Reliance on asyncio: Its deep dependency on Python's asyncio library can cause compatibility issues in synchronous environments, and because asyncio provides concurrency rather than true parallelism, CPU-bound work does not run in parallel.
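
The SOP-driven handoff between roles can be sketched as a publish/subscribe loop over a shared message pool: each role watches for messages produced by specific upstream steps and publishes its own artifact in response. This is a hypothetical illustration, not MetaGPT's code. The action names echo MetaGPT's (`WritePRD`, `WriteDesign`, `WriteCode`), but `Environment`, `Role`, and the string-producing lambdas are simplified stand-ins for LLM-backed actions.

```python
from dataclasses import dataclass
from typing import Callable, List, Set

@dataclass
class Message:
    cause_by: str      # the SOP step that produced this message
    content: str

@dataclass
class Role:
    name: str
    watches: Set[str]              # upstream steps this role reacts to
    action: str                    # the step this role performs
    produce: Callable[[str], str]  # turns upstream content into an artifact

class Environment:
    """Shared message pool: roles publish messages and subscribe by step name."""

    def __init__(self, roles: List[Role]) -> None:
        self.roles = roles
        self.pool: List[Message] = []

    def publish(self, msg: Message) -> None:
        self.pool.append(msg)

    def run(self, requirement: str, max_rounds: int = 10) -> List[Message]:
        self.publish(Message("UserRequirement", requirement))
        handled = 0
        for _ in range(max_rounds):
            new = self.pool[handled:]      # messages not yet seen by any role
            handled = len(self.pool)
            if not new:
                break                      # nothing left to react to
            for msg in new:
                for role in self.roles:
                    if msg.cause_by in role.watches:
                        self.publish(Message(role.action, role.produce(msg.content)))
        return self.pool

team = [
    Role("ProductManager", {"UserRequirement"}, "WritePRD", lambda c: f"PRD for: {c}"),
    Role("Architect", {"WritePRD"}, "WriteDesign", lambda c: f"Design from ({c})"),
    Role("Engineer", {"WriteDesign"}, "WriteCode", lambda c: f"Code implementing ({c})"),
]
pool = Environment(team).run("a CLI todo app")
for m in pool:
    print(f"{m.cause_by}: {m.content}")
```

Because roles subscribe by step name rather than calling each other directly, inserting a new role (say, a reviewer watching `WriteCode`) only requires adding it to the team list.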

Dify: Low-Code Platform for AI Agent Applications

Labels: "Production Framework", "Low-Code Development", "Enterprise-Grade Application", "Rapid Construction"

Project URL: https://github.com/langgenius/dify

Pros:

- Powerful Low-Code/No-Code Platform: Dify's visual, drag-and-drop interface empowers non-technical users to build sophisticated AI applications like smart chatbots and content generators, dramatically lowering the barrier to entry.

- Vendor-Agnostic Model Support: It supports hundreds of commercial and open-source models (including GPT, Claude, and locally deployed LLMs), allowing you to switch providers and find the best cost-performance balance.

- Packed with Enterprise-Grade Features: It includes a built-in LLMOps toolchain (logging, monitoring, optimization), private deployment options, and data security features, making it a solid choice for medium-to-large enterprises.

- From Prototype to Production in Minutes: Dify can generate APIs and WebApps with a single click, providing an end-to-end workflow for quickly validating business ideas and integrating them into existing systems.

- Built for Data-Driven Optimization: It offers a powerful RAG engine, context management, and user feedback analysis tools to help you continuously iterate and improve your application's performance.

- Robust Agent and Tool Integration: You can define Agents using LLM function calling or ReAct and equip them with over 50 built-in tools like Google Search, DALL·E, and WolframAlpha, or add your own.

Cons:

- Customization Has Its Limits: While powerful, the platform's reliance on pre-built modules makes it difficult to implement highly customized algorithms or complex data processing flows.

- Performance is Tied to the Chosen LLM: The application's effectiveness is ultimately capped by the capabilities of the underlying large language model.

- Scaling Requires Infrastructure Planning: High-frequency API calls can create performance bottlenecks on a single node, requiring a distributed architecture (like a PostgreSQL cluster) for large-scale deployments.

- Steep Learning Curve for Some Features: While the basics are easy, advanced configurations and model integrations can be challenging for beginners. The documentation and developer ecosystem are still growing.

- Risk of Spiraling API Costs: When using third-party model APIs, high-frequency usage can lead to unexpectedly high bills, requiring careful cost management strategies.
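
Once an app is published, Dify exposes it over an HTTP API, which is how the "integrate into existing systems" step usually works. The sketch below builds (but does not send) a request for the chat endpoint as we understand it from Dify's API documentation, `POST /v1/chat-messages` with a Bearer app key. The base URL, key, and user ID are placeholders, and the field names should be checked against the API reference page your own Dify instance generates.

```python
import json
import urllib.request

def build_chat_request(base_url: str, api_key: str, query: str, user: str,
                       conversation_id: str = "") -> urllib.request.Request:
    """Build a blocking chat request for a published Dify app (not sent here)."""
    body = {
        "inputs": {},                        # values for the app's input variables, if any
        "query": query,
        "response_mode": "blocking",         # "streaming" returns server-sent events instead
        "conversation_id": conversation_id,  # empty string starts a new conversation
        "user": user,                        # your own end-user identifier
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat-messages",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )

# Placeholder base URL and key; substitute your instance's values.
req = build_chat_request("https://api.dify.ai/v1", "app-XXXX",
                         "Summarize our refund policy", "user-123")
```

Sending it is a single `urllib.request.urlopen(req)` call against a reachable Dify instance with a valid app key.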

BeeAI: A Modular Workflow Orchestrator

Labels: "Production Framework", "Enterprise AI Development", "Intelligent Workflow Optimization", "Modular Extension"

A quick heads-up: BeeAI is more of a powerful workflow orchestrator than a true multi-agent framework. This gives you granular control, which is often a better fit for real-world business processes.

Project URL: https://github.com/i-am-bee/beeai-framework

Pros:

- Flexible, Modular Architecture: BeeAI's modular design lets you assemble pipelines from components like natural language processing and data cleaning. It seamlessly integrates with mainstream tools like TensorFlow, PyTorch, and Hugging Face.

- Intelligent Task and Resource Scheduling: A built-in dynamic resource allocation algorithm optimizes execution order based on task priority and resource availability, making it highly performant in high-concurrency scenarios.

- Built for High-Performance Computing (HPC): It's compatible with HPC environments and can scale from a single node to hundreds, fully leveraging GPU/TPU acceleration for massive data processing tasks.

- Open-Source with Industrial-Grade Potential: As an open-source framework with detailed documentation, it encourages community contributions and custom extensions. It already supports Model Context Protocol (MCP) tools and has more agent functionality on its roadmap.

Cons:

- Steep Learning Curve for Distributed Systems: The framework's advanced concepts, like large-scale workflow management, require developers to have prior experience with distributed systems.

- Some Features Are Still Experimental: Advanced functionality, such as its MCP-based agent features, is still under active development, and documentation for these features is limited.

- Nascent Community and Ecosystem: Compared to mainstream frameworks like LangChain, its library of third-party plugins and pre-built workflows is still small.
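
The source does not document how BeeAI's scheduler actually works, so the following is only a generic illustration of priority- and resource-aware scheduling, not BeeAI's implementation; `Task`, `schedule`, and the GPU counts are hypothetical. Each round starts the most urgent tasks that fit the free GPUs, lets lower-priority tasks backfill leftover capacity, and defers the rest.

```python
import heapq
from dataclasses import dataclass, field
from typing import List

@dataclass(order=True)
class Task:
    priority: int                     # lower number = more urgent; the only sort key
    name: str = field(compare=False)
    gpus: int = field(compare=False)  # resources the task needs

def schedule(tasks: List[Task], gpus_available: int) -> List[List[str]]:
    """Greedy priority scheduling: returns the task names started in each round."""
    queue = list(tasks)
    heapq.heapify(queue)
    rounds: List[List[str]] = []
    while queue:
        free, started, deferred = gpus_available, [], []
        while queue:
            task = heapq.heappop(queue)
            if task.gpus > gpus_available:
                raise ValueError(f"{task.name} can never fit")
            if task.gpus <= free:
                free -= task.gpus
                started.append(task.name)
            else:
                deferred.append(task)     # wait for the next round
        for task in deferred:
            heapq.heappush(queue, task)
        rounds.append(started)
    return rounds

tasks = [Task(3, "embed-docs", 2), Task(1, "answer-user", 1),
         Task(4, "retrain", 4), Task(2, "moderate", 1)]
print(schedule(tasks, gpus_available=4))
# [['answer-user', 'moderate', 'embed-docs'], ['retrain']]
```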

Camel: Research-Grade Massive Agent Simulation

Labels: "Research-Grade Production", "Academic & R&D Focused", "Requires Engineering Optimization"

Project URL: https://github.com/camel-ai/camel

Pros:

- Massive-Scale Agent Simulation: Camel can support simulations with millions of agents, making it an unparalleled tool for researching emergent behaviors and scaling laws in complex systems.

- Supports Dynamic, Stateful Interactions: It provides real-time communication and stateful memory, allowing agents to make multi-step decisions based on historical context, which is crucial for coherent, long-running tasks.

- Flexible and Modular by Design: It supports various agent types (role-playing, RAG-enhanced), task scenarios (data generation, automation), and model integrations, making it highly adaptable for interdisciplinary research.

- Advanced Data Generation and Self-Improvement: It integrates techniques like Chain-of-Thought (CoT) reasoning and self-instruction to automatically produce high-quality structured data and enables agents to self-optimize through reinforcement learning.

- Developer-Friendly and Well-Documented: Camel offers detailed documentation, code examples, and interactive tutorials (including Google Colab notebooks) to get developers up to speed quickly.

Cons:

- Extremely Resource-Intensive: Simulating millions of agents requires massive GPU/TPU resources, making it prohibitively expensive and energy-intensive for many.

- Complex to Debug at Scale: As the number of agents grows, the complexity of communication and task allocation explodes, making debugging and optimization incredibly difficult.

- Challenges in Evaluation and Security: There are currently no standardized methods for quantitatively evaluating emergent behaviors, and large-scale agent systems can pose unpredictable security risks.
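
CAMEL's best-known pattern is role-playing: an AI user agent issues instructions and an AI assistant agent carries them out, each keeping its own conversation memory. The stdlib sketch below imitates that loop with scripted policies in place of LLMs; `StatefulAgent`, `role_play`, and the `TASK_DONE` stop word are hypothetical, not CAMEL's API. Both policies depend on memory, which is what makes multi-step behavior coherent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker role, message text)

@dataclass
class StatefulAgent:
    role: str
    policy: Callable[[List[Turn]], str]        # picks the next message from memory
    memory: List[Turn] = field(default_factory=list)

    def step(self, incoming: Turn) -> str:
        self.memory.append(incoming)            # remember what we were told
        reply = self.policy(self.memory)
        self.memory.append((self.role, reply))  # and what we answered
        return reply

def role_play(user: StatefulAgent, assistant: StatefulAgent, task: str,
              max_turns: int = 10) -> int:
    """Alternate instructions and answers until the user agent says TASK_DONE."""
    instruction = user.step(("task", task))
    for turn in range(max_turns):
        if instruction == "TASK_DONE":
            return turn                         # number of completed exchanges
        answer = assistant.step((user.role, instruction))
        instruction = user.step((assistant.role, answer))
    return max_turns

PLAN = ["collect the survey data", "clean missing values", "plot the results"]

def researcher_policy(memory: List[Turn]) -> str:
    # The next instruction depends on how many were already issued.
    issued = sum(1 for speaker, _ in memory if speaker == "Researcher")
    return PLAN[issued] if issued < len(PLAN) else "TASK_DONE"

def analyst_policy(memory: List[Turn]) -> str:
    # Numbering the reply requires remembering earlier answers.
    done = sum(1 for speaker, _ in memory if speaker == "Analyst")
    return f"done: {memory[-1][1]} (step {done + 1})"

user = StatefulAgent("Researcher", researcher_policy)
assistant = StatefulAgent("Analyst", analyst_policy)
turns = role_play(user, assistant, "Analyze the customer survey")
print(turns, assistant.memory[-1])  # 3 ('Analyst', 'done: plot the results (step 3)')
```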

CrewAI: Production-Ready Enterprise Automation

Labels: "Production-Grade Framework", "Enterprise Automation", "Dual-Mode Architecture", "Rapid Prototyping"

How it compares: CrewAI strikes a balance between ease of use and power. Compared to LangGraph, it's much faster to get started (you can build a multi-agent system in minutes) but offers slightly less flexibility for hyper-complex logic. It's far more mature than experimental frameworks like OpenAI Swarm.

Deployment advice: For simple automation, CrewAI's enterprise version (with Salesforce/SAP integration) is a great choice. For more complex needs, combine its Crews+Flows model with a framework like LangGraph for distributed scheduling.

Project URL: https://github.com/crewAIInc/crewAI

Pros:

- Unique Dual-Mode Architecture: Crews and Flows: It combines autonomous agent teams ("Crews") with event-driven processes ("Flows"). This allows for both high-level autonomous decision-making and fine-grained control over business logic, all while keeping the code clean and production-ready.

- Designed for Production-Grade Automation: Flows provide secure state management, conditional branching, and Python code integration, enabling precise orchestration of complex business scenarios. With over 100,000 certified developers, it's becoming a standard for enterprise automation.

- Lean, Independent, and Efficient: Built from the ground up without relying on LangChain, CrewAI offers a more efficient native API for agent management. Recent versions have also enhanced toolchain integration, allowing direct calls to production code.

- Role-Based Agents for Clear Specialization: Agents are designed with clear goals, specialized knowledge, and specific tool permissions. This supports dynamic task delegation and makes it easy to build specialized teams for tasks like market analysis.

- Superior Developer Experience: It provides a visual process orchestration tool (flow.plot()) and intuitive decorators (@listen) for event handling, making it easier to implement complex workflows than with many alternatives.

Cons:

- Dual Architecture Adds Complexity: Mastering both the autonomous Crews and the controlled Flows steepens the learning curve. Poorly designed flows can easily lead to agent decision conflicts.

- Debugging Can Be Challenging: While console logging has improved, tracking flow states often requires third-party tools like Prometheus. The open-source version lacks enterprise-grade audit logging.

- Rapid Iteration Can Lead to Breaking Changes: The framework is evolving quickly, and early APIs have been unstable. Documentation sometimes lags behind code updates.

- Resource-Hungry at Scale: Running large-scale Crews (100+ agents) is memory-intensive and typically requires a containerization solution like Kubernetes for elastic scaling.
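
The Flows model (entry-point steps plus @listen handlers that fire when another step finishes) can be imitated in a few lines of plain Python. This is a hypothetical mini-runner for illustration only, not CrewAI's implementation; in CrewAI itself you would subclass its `Flow` class and use the `start` and `listen` decorators it provides. Here `MiniFlow`, `start`, and `listen` are stand-ins, and each method's return value is passed to its listeners.

```python
from typing import Callable, Dict, List, Tuple

def start():
    """Mark a method as an entry point of the flow."""
    def decorator(fn: Callable) -> Callable:
        fn._is_start = True
        return fn
    return decorator

def listen(trigger: Callable):
    """Mark a method to run after `trigger` completes, receiving its return value."""
    def decorator(fn: Callable) -> Callable:
        fn._listens_to = trigger.__name__
        return fn
    return decorator

class MiniFlow:
    """Tiny event-driven runner: start methods fire first, listeners fire on completion."""

    def __init__(self) -> None:
        self.state: Dict[str, object] = {}
        self.trace: List[str] = []

    def kickoff(self):
        members = vars(type(self))
        starts = [n for n, fn in members.items() if getattr(fn, "_is_start", False)]
        listeners: Dict[str, List[str]] = {}
        for name, fn in members.items():
            trigger = getattr(fn, "_listens_to", None)
            if trigger is not None:
                listeners.setdefault(trigger, []).append(name)

        result = None
        queue: List[Tuple[str, tuple]] = [(name, ()) for name in starts]
        while queue:
            name, args = queue.pop(0)
            result = getattr(self, name)(*args)  # run the step
            self.trace.append(name)
            # "Completed" event: queue every listener with this step's result.
            queue.extend((follower, (result,)) for follower in listeners.get(name, []))
        return result

class ResearchFlow(MiniFlow):
    @start()
    def pick_topic(self):
        self.state["topic"] = "multi-agent frameworks"  # shared flow state
        return self.state["topic"]

    @listen(pick_topic)
    def outline(self, topic):
        return f"Outline for {topic}"

    @listen(outline)
    def draft(self, outline):
        return f"Draft based on: {outline}"

flow = ResearchFlow()
print(flow.kickoff())  # Draft based on: Outline for multi-agent frameworks
print(flow.trace)      # ['pick_topic', 'outline', 'draft']
```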

AutoGen: Microsoft's Conversational AI Agent Framework

Labels: "Production Framework", "Ideal for Complex Task Development", "Requires a Technical Team", "Enterprise Automation Solution"

How it compares: In contrast to CrewAI's explicit role-based agent design, AutoGen focuses on creating conversational agents that solve tasks through dynamic, multi-turn dialogue. This makes it highly flexible for complex problem-solving where the path to a solution is not predefined.

Project URL: https://github.com/microsoft/autogen

Developed by Microsoft, AutoGen is a powerful framework for creating applications with conversational agents.

Pros:

- Powerful Multi-Agent Conversations: AutoGen excels at creating multiple agents that collaborate to handle complex tasks, automating processes like code generation, testing, and decision optimization through a conversational framework.

- Flexible, Multi-Provider LLM Support: It works with the major LLM providers (OpenAI, Anthropic, Google Gemini) and supports both Azure cloud services and local open-source model deployment.

- Seamless Code Generation and Execution: Built with software development in mind, it lets agents write code, execute it, and use the execution results to correct errors automatically. Customizable code templates and cross-language support further boost developer productivity.

- Essential Human-in-the-Loop Capabilities: It allows developers to intervene at critical decision points, providing a crucial balance between full automation and manual control.

- Scalable for Enterprise Automation: With support for containerized deployment and complex task decomposition, AutoGen is well-suited for building scalable, enterprise-grade automation systems.

Cons:

- Steep Learning Curve: Getting the most out of AutoGen requires a solid understanding of multi-agent architecture and conversational programming, making it challenging for newcomers.

- High Resource Consumption: Running multiple agents in parallel is computationally expensive, and managing the costs of local deployment can be complex.

- Scalability Can Be Constrained by LLMs: When handling enterprise-scale tasks, the framework can run into limitations imposed by model token limits and context windows.

- Output Quality Depends Heavily on Templates: The quality of the generated code is highly dependent on the initial templates and prompts. Customizing them for specific domains requires significant effort.

- Debugging Agent Interactions is Tough: Pinpointing the source of an error within a complex, multi-agent conversation is notoriously difficult and requires specialized debugging skills.
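
AutoGen's classic two-agent setup pairs an assistant that writes code with a user proxy that executes it and reports back, and by convention a conversation ends when a message contains TERMINATE. The stdlib sketch below imitates that loop with scripted replies instead of LLM calls; `converse`, `assistant`, `user_proxy`, and the `human_review` hook (a loose analogue of AutoGen's human input modes) are hypothetical names, not AutoGen's API.

```python
from typing import Callable, List, Optional, Tuple

Message = Tuple[str, str]                # (sender, content)
ReplyFn = Callable[[List[Message]], str]

def converse(a_name: str, a_reply: ReplyFn, b_name: str, b_reply: ReplyFn,
             opening: str, max_turns: int = 8,
             human_review: Optional[Callable[[str, str], str]] = None) -> List[Message]:
    """Two agents alternate replies until one says TERMINATE or max_turns is hit."""
    transcript: List[Message] = [(a_name, opening)]
    speakers = [(b_name, b_reply), (a_name, a_reply)]
    for turn in range(max_turns):
        name, reply_fn = speakers[turn % 2]
        content = reply_fn(transcript)
        if human_review is not None:
            content = human_review(name, content)  # a person may approve or edit
        transcript.append((name, content))
        if "TERMINATE" in content:
            break
    return transcript

def assistant(transcript: List[Message]) -> str:
    # Scripted "LLM": the first draft has a bug, which it fixes when asked.
    if "fix" in transcript[-1][1].lower():
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"

def user_proxy(transcript: List[Message]) -> str:
    # Execute the proposed code and report back. This is only safe because the
    # code is scripted; real model output should run in a sandbox or container.
    namespace: dict = {}
    exec(transcript[-1][1], namespace)
    if namespace["add"](2, 3) == 5:
        return "Tests pass. TERMINATE"
    return "Please fix: add(2, 3) should return 5."

reviewed: List[str] = []

def log_review(sender: str, content: str) -> str:
    reviewed.append(sender)  # record that a human saw this message
    return content           # approve it unchanged

transcript = converse("user_proxy", user_proxy, "assistant", assistant,
                      "Write a Python function add(a, b) that returns the sum.",
                      human_review=log_review)
for sender, content in transcript:
    print(f"{sender}: {content}")
```

Even in this toy, tracing a failure means reading the whole transcript, which hints at why debugging real multi-agent conversations is hard.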

How to Choose the Right Multi-Agent AI Framework

Navigating the multi-agent AI landscape requires matching the tool to the task. Your choice should be guided by your project's complexity, scale, and your team's expertise.

- For Beginners (Tier 1): Start with OpenAI Swarm to grasp the fundamentals of agentic collaboration in a simple, stateless environment.

- For Prototyping (Tier 2): OpenAI's Agents SDK offers a Python-native experience for rapid development, while LangChain-Chatchat provides the essential tools for building the knowledge base your agents will need.

- For Production (Tier 3): Here, the choice depends on your specific needs. CrewAI offers a superb balance of ease-of-use and power for enterprise automation. AutoGen provides unmatched flexibility for complex, conversational problem-solving. MetaGPT excels at structured, end-to-end software development tasks, while Dify empowers teams with a low-code platform for rapid application delivery.

The right framework is a force multiplier, turning ambitious AI concepts into reality. Choose wisely, and start building.

Key Takeaways

• Evaluate multi-agent AI frameworks based on your project’s specific needs and goals.

• Explore the three tiers: Learning, Development, and Production for tailored solutions.

• Consider tools like CrewAI, AutoGen, and MetaGPT for effective implementation.