Jason Wei's Three Laws of AI: A Framework for the Future

"All tasks that can be verified will eventually be solved by AI."

"Intelligence will become a commodity. The cost and accessibility of acquiring knowledge or performing some kind of reasoning will approach zero in the future."

In a rare public appearance since joining Meta, Jason Wei, a former core researcher at OpenAI and a key author of the seminal Chain-of-Thought (CoT) paper, delivered a fascinating talk at the Stanford University AI Club.

Wei laid out a framework for thinking about where artificial intelligence is headed, built on three core concepts he believes are crucial for 2025 and beyond:

- The Verifier's Law: This principle determines which dominoes will fall first, stating that the ease of training an AI to solve a task is directly proportional to how easily its solution can be verified.

- The Commoditization of Intelligence: This trend explains how breakthroughs scale and costs plummet, turning advanced reasoning and knowledge retrieval into a utility approaching zero cost.

- The Jagged Edge of Intelligence: This concept reveals the uneven and unpredictable timeline of AI's capabilities, where progress is marked by massive leaps in some areas and surprising stagnation in others.

While not explicitly a guide for founders, this AI framework offers critical insights for anyone building, investing, or working in the AI space.

About Jason Wei: Jason is currently a researcher at Meta Superintelligence Labs. Prior to Meta, he was a core scientist at OpenAI, where he contributed to the o1 model and the Deep Research product. He is also the first author of the influential Chain-of-Thought paper.

Watch the Full Lecture: https://www.youtube.com/watch?v=b6Doq2fz81U

Let's start with a fundamental question: "How will AI actually change our world?"

Ask around, and you'll get wildly different answers. A friend in quantitative trading might say, "ChatGPT is a cool toy, but it can't touch the specialized work I do." A top AI researcher, asked the same question, gave a much starker answer: "We probably have two to three years of work left before AI can do our jobs."

This chasm in perspectives reveals just how uncertain the future of AI feels. This article breaks down Jason Wei's framework for making sense of it, exploring these three key trends in AI progress.

1. The Commoditization of Intelligence: Why AI Costs Are Plummeting

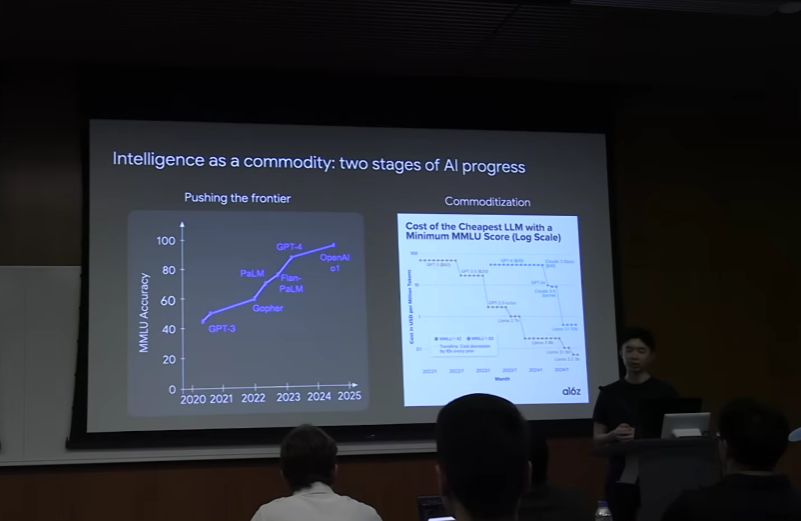

First, let's unpack the commoditization of intelligence. According to Wei, AI development unfolds in two distinct phases that explain the rapid drop in the cost of AI capabilities.

The Two Phases of AI Development

Phase one is pushing the frontier. This is the research-heavy stage where AI can't yet perform a task well, and scientists work to unlock that new capability. If you plot the performance of models on a benchmark like MMLU (Massive Multitask Language Understanding) over the last five years, you see this gradual, hard-won improvement.

Phase two is where it gets interesting: once a capability is unlocked, it rapidly becomes a commodity. Take a look at this chart. The Y-axis shows the cost (in USD) to achieve a specific performance level on MMLU. The trend is undeniable: the price of a certain "amount" of intelligence drops dramatically year after year.

What is Adaptive Compute?

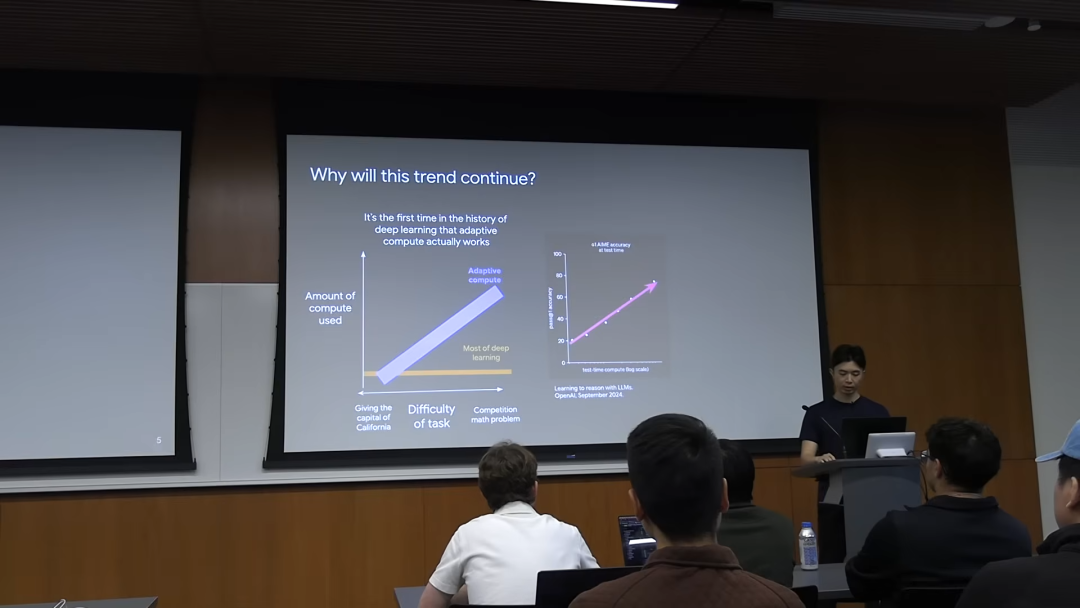

So, why is this happening, and why will it continue? For the first time in the history of deep learning, adaptive compute actually works. Until recently, a given model spent roughly the same amount of compute on every query, whether you were asking "What's the capital of California?" or tackling a complex math Olympiad problem. That's incredibly inefficient.

We're now in the era of adaptive compute, where a model can dynamically allocate resources based on the task's difficulty. This means you don't always need a massive, expensive model; for simple tasks, you can use just enough compute to get the job done, driving the cost down. OpenAI's o1 model, first released in 2024, was a major breakthrough here. It showed that by investing more compute at test time, you could significantly boost performance on difficult benchmarks.
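To make the idea concrete, here is a minimal sketch of difficulty-based budget allocation. Everything in it is an assumption for illustration: the `Budget` tiers, the token numbers, and the keyword-based `estimate_difficulty` heuristic are hypothetical. In reasoning models such as o1, the allocation is learned and happens inside the model rather than in an external router like this one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    label: str
    max_reasoning_tokens: int


# Hypothetical tiers: (difficulty ceiling, budget). Numbers are illustrative only.
TIERS = [
    (0.3, Budget("light", 0)),
    (0.7, Budget("medium", 2_000)),
    (1.0, Budget("heavy", 32_000)),
]


def estimate_difficulty(prompt: str) -> float:
    """Toy difficulty score in [0, 1]; a real system would learn this."""
    hard_markers = ("prove", "olympiad", "optimize", "derive")
    text = prompt.lower()
    hits = sum(marker in text for marker in hard_markers)
    length_term = min(len(prompt) / 500, 1.0)
    return min(0.4 * hits + 0.3 * length_term, 1.0)


def allocate(prompt: str) -> Budget:
    """Pick the cheapest tier whose ceiling covers the estimated difficulty."""
    score = estimate_difficulty(prompt)
    for ceiling, budget in TIERS:
        if score <= ceiling:
            return budget
    return TIERS[-1][1]


if __name__ == "__main__":
    for question in (
        "What's the capital of California?",
        "Prove this olympiad inequality and derive the bound.",
    ):
        print(question, "->", allocate(question))
```

The design point is only that cost follows difficulty: the easy question gets no extra reasoning budget, while the hard one gets the largest tier.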

The Evolution of Information Retrieval with AI Agents

The other side of this coin is that our access to public information is becoming instantaneous. Information retrieval has evolved through four eras: pre-internet, internet, chatbot, and now, the intelligent agent era. In each successive era, the time to find an answer has collapsed.

A complex query like, "How many couples got married in Busan in 1983?" once required digging through government archives. Today, an AI agent can solve it by navigating the Korean Statistical Information Service (KOSIS) database, figuring out the right queries, and extracting the answer.

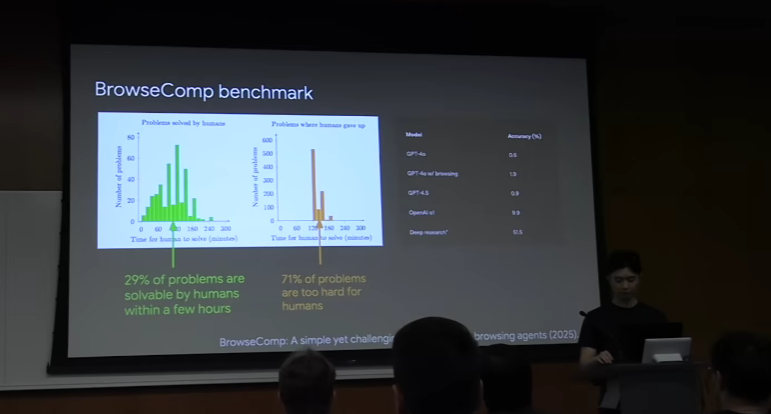

To quantify this, OpenAI developed a benchmark called BrowseComp, which contains questions with easily verifiable but time-consuming answers. As the chart shows, OpenAI's Deep Research model can already solve about half of them—a remarkable leap in AI capability.

Key Implications of AI Commoditization

- Cost Approaches Zero: Once AI masters a capability, the cost to use it will plummet. This trend is here to stay.

- Instant Knowledge: Any publicly available information will be at your fingertips, instantly.

- Democratization of Expertise: Fields once protected by knowledge barriers, like programming or personal health, will become radically more accessible.

- The Rise of Private Data: As public information becomes a free commodity, the value of private, internal, and proprietary data will skyrocket, becoming a key competitive advantage.

- Personalized Information Streams: We'll move from a shared, public internet to a personalized internet where the information you want finds you.

2. The Verifier's Law: If You Can Check It, AI Can Solve It

The second core idea in Jason Wei's AI framework is the asymmetry between solving and verifying. This is a classic concept in computer science: for many problems, it's far easier to check if a solution is correct than it is to find the solution in the first place.

Understanding the Asymmetry of Solving vs. Verifying

Consider these examples:

- Sudoku: Solving a hard puzzle is tough, but verifying a completed grid is trivial.

- Building a platform like X (formerly Twitter): This requires a massive team and years of work. Verifying if it works? Just open the app.

- Writing a "Factual" Article: Here the asymmetry runs the other way. It's easy to generate plausible-sounding text, but verifying each fact can be an arduous task.
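The Sudoku case can be made concrete with a short verifier. This is a sketch, not a solver: it only checks a completed 9x9 grid, which takes a few lines, whereas finding the grid in the first place requires search. The function name `is_valid_sudoku` and the pattern used to build a sample solved grid are choices made for this example.

```python
def is_valid_sudoku(grid: list[list[int]]) -> bool:
    """Return True if a completed 9x9 grid satisfies all Sudoku constraints."""
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    expected = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in range(0, 9, 3)
        for bc in range(0, 9, 3)
    ]
    # Every row, column, and 3x3 box must contain exactly the digits 1..9.
    return all(unit == expected for unit in rows + cols + boxes)


# A known-valid grid built from a shifting pattern (illustrative only).
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]

# Swapping two cells in the first row keeps the row valid but breaks two columns.
broken = [row[:] for row in solved]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]

if __name__ == "__main__":
    print(is_valid_sudoku(solved))
    print(is_valid_sudoku(broken))
```

The check touches each cell a fixed number of times, so it is fast, objective, and trivially automated.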

We can map tasks on a graph where the X-axis is the difficulty of generating a solution and the Y-axis is the difficulty of verifying it.

The key insight is that you can often do upfront work to make a hard problem much easier to verify, for example, by providing unit tests for a coding challenge.

What is the Verifier's Law in AI?

This brings us to the Verifier's Law. Wei's claim is this: The ease of training an AI to solve a task is proportional to the task's verifiability. In other words, any solvable task that is easy to verify will eventually be conquered by AI, making it a prime candidate for AI automation.

The 5 Components of Verifiability

"Verifiability" can be broken down into five key components:

- Objectivity: Is there a clear, undisputed right or wrong answer?

- Speed: Can you check a solution quickly?

- Scalability: Can you check millions of solutions automatically?

- Low Noise: Is the verification process reliable and consistent?

- Continuous Feedback: Does verification provide a nuanced score that indicates quality, not just a simple "pass/fail"?
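The last component is the easiest to show in code. The sketch below contrasts a pass/fail verifier with a graded one for a coding task checked by test cases. The helper names (`binary_score`, `graded_score`) and the test cases are hypothetical, chosen only for illustration.

```python
from typing import Callable, Sequence

# Each case is (positional arguments, expected result).
Case = tuple[tuple, object]


def _passes(fn: Callable, args: tuple, expected: object) -> bool:
    """Run one test case; any exception counts as a failure."""
    try:
        return fn(*args) == expected
    except Exception:
        return False


def binary_score(fn: Callable, cases: Sequence[Case]) -> float:
    """Pass/fail: 1.0 only if every case passes."""
    if not cases:
        raise ValueError("need at least one test case")
    return 1.0 if all(_passes(fn, a, e) for a, e in cases) else 0.0


def graded_score(fn: Callable, cases: Sequence[Case]) -> float:
    """Continuous feedback: fraction of cases passed."""
    if not cases:
        raise ValueError("need at least one test case")
    return sum(_passes(fn, a, e) for a, e in cases) / len(cases)


# Test cases for an absolute-value function.
ABS_CASES: list[Case] = [((3,), 3), ((-2,), 2), ((0,), 0), ((-5,), 5)]


def buggy_abs(x: int) -> int:
    """A candidate that forgets to negate negative inputs."""
    return x


if __name__ == "__main__":
    print(binary_score(buggy_abs, ABS_CASES), graded_score(buggy_abs, ABS_CASES))
```

For the buggy candidate, the binary verifier reports only failure, while the graded verifier reports that half the cases pass, which gives a training loop something to climb.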

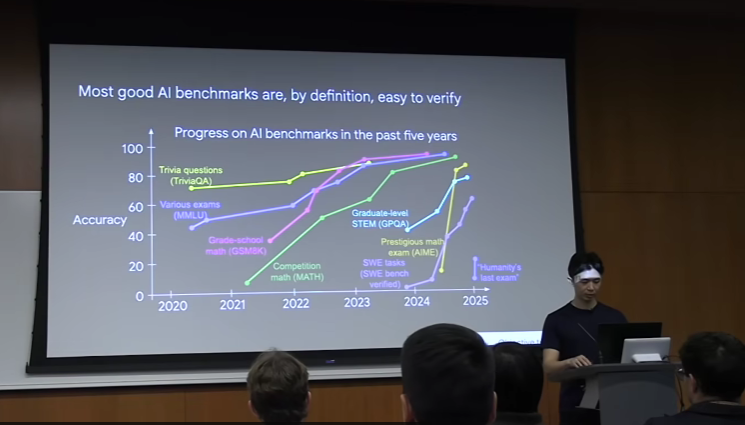

Most AI benchmarks are, by design, highly verifiable, and over the past five years AI has systematically crushed the benchmarks that fit these criteria. That is the Verifier's Law in action.

AlphaEvolve: The Verifier's Law in Action

A brilliant real-world example of this principle is DeepMind's AlphaEvolve project. It tackles problems with high verification asymmetry, such as mathematical and algorithmic optimization, by investing massive compute into an LLM-driven evolutionary search.

AlphaEvolve's strategy is elegant:

- Sample: Use an LLM to generate a massive number of potential code solutions.

- Grade: Because the task is highly verifiable (code correctness and speed can be tested automatically), every solution is scored automatically.

- Iterate: Feed the best-performing solutions back into the LLM as examples to generate even better solutions.

By running this loop, AlphaEvolve found solutions that improved on long-standing human-designed results. It focuses all its energy on finding the single best solution to one specific, verifiable problem.
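The sample, grade, iterate loop above can be sketched on a toy problem. This is not DeepMind's implementation: a random single-character mutation stands in for the LLM proposer, and the "task" is matching a hidden string, chosen only because it is cheap to grade. All names (`TARGET`, `grade`, `propose`, `search`) are hypothetical.

```python
import random

TARGET = "verifiable"  # toy stand-in for an unknown optimum
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def grade(candidate: str) -> int:
    """Verifier: cheap, automatic, graded (count of matching characters)."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def propose(parent: str, rng: random.Random) -> str:
    """Stand-in for the LLM proposer: mutate one random position."""
    i = rng.randrange(len(parent))
    return parent[:i] + rng.choice(ALPHABET) + parent[i + 1:]


def search(generations: int = 200, samples: int = 50, keep: int = 5,
           seed: int = 0) -> tuple[str, list[int]]:
    rng = random.Random(seed)
    pool = ["a" * len(TARGET)]
    history = []
    for _ in range(generations):
        # Sample: generate many candidates from the current best ones.
        children = [propose(rng.choice(pool), rng) for _ in range(samples)]
        # Grade and iterate: keep the top scorers (parents included) as the
        # seeds for the next round; ties are broken alphabetically.
        ranked = sorted(set(pool + children), key=lambda s: (-grade(s), s))
        pool = ranked[:keep]
        history.append(grade(pool[0]))
    return pool[0], history


if __name__ == "__main__":
    best, history = search()
    print(best, history[-1], "of", len(TARGET))
```

Because the best candidates are carried over each round, the best score never decreases. The loop is only possible because `grade` is automatic: with a slow or subjective verifier, scoring thousands of candidates per run would not be feasible.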

3. The Jagged Edge of Intelligence: Why AI Progress is Unpredictable

Finally, let's talk about the jagged edge of intelligence. The discourse around AI's future is deeply polarized, but the reality of AI progress is more nuanced than a simple upward curve.

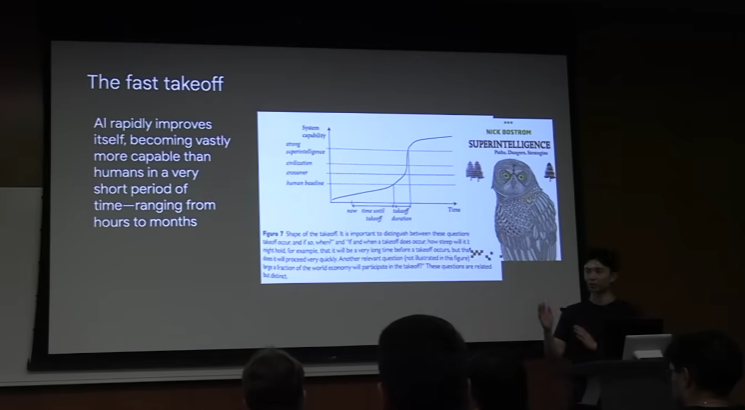

Debunking the "Fast Takeoff" Singularity Hypothesis

For years, a dominant hypothesis in AI has been the "fast takeoff" or "singularity." This theory suggests that once an AI becomes capable of self-improvement, it will trigger an explosive, recursive loop of intelligence growth.

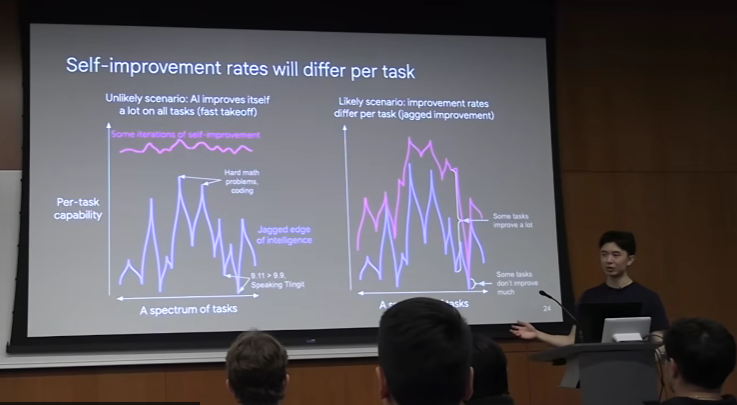

Wei argues this scenario is unlikely because AI self-improvement isn't a binary switch; it's a spectrum of capabilities that develops gradually. More importantly, the pace of improvement must be considered on a per-task basis. The landscape of AI capabilities looks more like a jagged mountain range than a smooth slope. There are "peaks" where AI excels and deep "valleys" where it fails on seemingly simple tasks.

How to Predict the Rate of AI Improvement

We won't see a single model master everything overnight. Instead, each task will improve at its own rate. Here are a few rules of thumb for predicting the rate of AI progress on a given task:

- Digital vs. Physical: AI is fundamentally better at digital tasks where iteration speed is near-infinite.

- Human Difficulty as a Proxy: Tasks that are easier for humans are generally easier for AI.

- Beyond Human Limits: AI can excel at tasks impossible for humans due to physiological limitations, like analyzing millions of images.

- Data is King: The more high-quality data available, the better AI will perform.

- Clear Metrics Unlock Self-Improvement: A clear objective metric makes it possible to apply reinforcement learning, so the model can keep training against the metric instead of waiting on new human-written data.

A Timeline for Future AI Capabilities

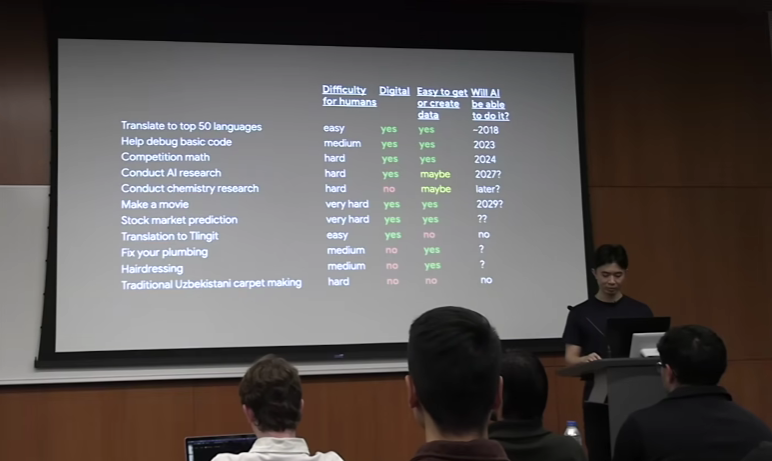

Using this AI framework, we can make some educated guesses about the timeline for various AI capabilities:

- Translate Top 50 Languages: Done. (Digital, tons of data)

- Debug Basic Code: Done (2023). (Digital, tons of data)

- Competition Math: Done (2024). (Digital, lots of data, highly verifiable)

- Conduct AI Research: Maybe 2027. (Digital, but data is hard to generate)

- Create a Feature Film: Maybe 2029. (Digital with lots of data)

- Predict the Stock Market: Who knows? (Extremely hard for humans)

- Translate Tlingit: Very unlikely. (Digital, but virtually no data)

- Fix Your Plumbing: A long way off. (Physical, limited data)

- Navigate Complex Social Negotiations: Extremely unlikely. (Requires deep emotional intelligence, not easily verifiable)

The key takeaway on the jagged edge of intelligence is that a single, sudden fast takeoff is unlikely. The biggest impacts of AI will be on tasks that are digital, data-rich, and verifiable. This means some fields, like software development, are being completely transformed, while others, like skilled trades, may remain largely untouched for decades to come.

Key Takeaways

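- The Verifier's Law: Tasks that are easy to verify are the ones AI will eventually solve, and the ones most likely to be automated first.

- The Commoditization of Intelligence: Once a capability is unlocked, expect its cost to fall toward zero and access to widen.

- The Jagged Edge of Intelligence: AI progress will be uneven across tasks, fastest where the work is digital, data-rich, and verifiable.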